Building a LangGraph Agent with DeepSeek

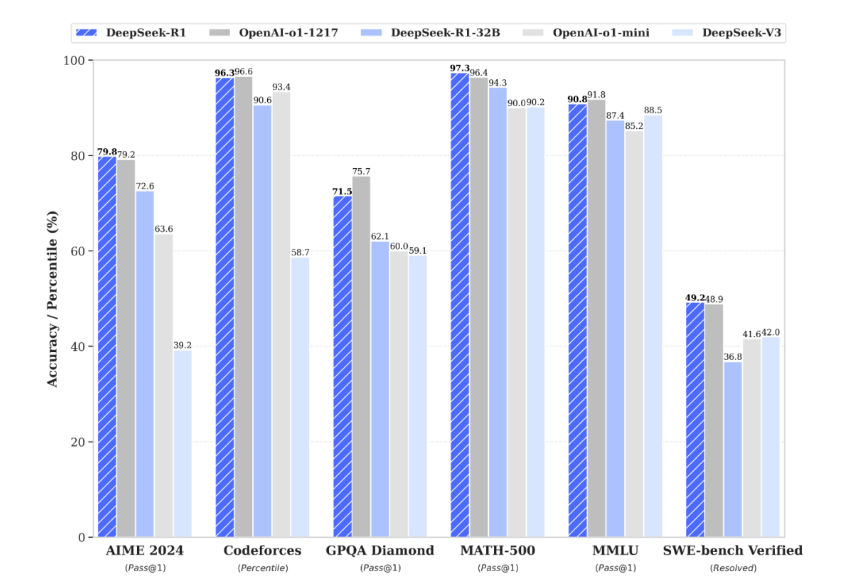

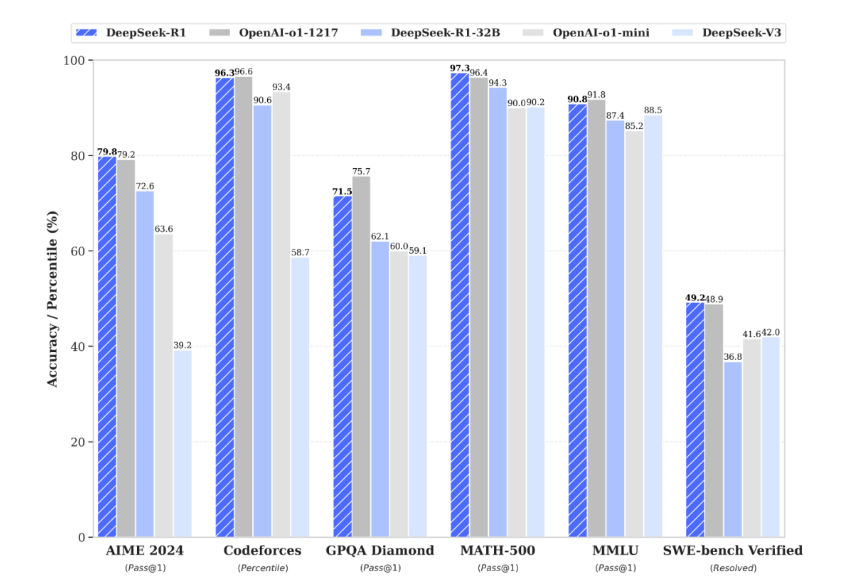


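With the release of DeepSeek R1, it is hard not to turn our attention to a model that catches up with, and in places surpasses, several of the leading large models. Its key ingredient is the large-scale use of reinforcement learning in post-training, which greatly improves the model's reasoning ability with very little labeled data. DeepSeek reports performance on math, code, and natural-language reasoning tasks comparable to the official release of OpenAI o1. To get a better feel for how it performs in practice, in this article we use it to build an agent.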

Installation

```python
!pip install -q openai langchain langgraph langchain-openai langchain-deepseek langgraph-checkpoint-sqlite tavily-python langchain_community langchainhub langchain_experimental arxiv pygraphviz
```

These are the imports and settings we need to prepare in advance:

```python
import os
import operator
from typing import TypedDict, Annotated

from langchain_core.messages import AnyMessage, SystemMessage, HumanMessage, ToolMessage
from langchain_openai import ChatOpenAI
from langchain_community.tools.tavily_search import TavilySearchResults
from langgraph.graph import StateGraph, END

# Replace the placeholders with your own keys and never publish real keys in code.
# setdefault keeps a key that is already exported in your shell.
os.environ.setdefault("DEEPSEEK_API_KEY", "your-deepseek-api-key")
os.environ.setdefault("TAVILY_API_KEY", "your-tavily-api-key")

DEEPSEEK_API_KEY = os.getenv("DEEPSEEK_API_KEY")
```

Creating the Agent

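Now we implement the agent workflow. We define an `Agent` class that holds a model, a set of tools, an optional system prompt, and an optional checkpointer, passed in as the following four parameters: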

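- model: the language model to use (it must support tool calling)
- tools: a list of tool objects, each with a `name` and an `invoke` method
- system: an optional system prompt, prepended to the conversation
- checkpointer: an optional checkpointer for saving state; later we will plug in SQLite for persistence.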

```python
class AgentState(TypedDict):
    # operator.add is the reducer: messages returned by a node are appended
    # to the existing list instead of replacing it
    messages: Annotated[list[AnyMessage], operator.add]


class Agent:
    def __init__(self, model, tools, system="", checkpointer=None):
        self.system = system
        # Set up the state machine: "llm" calls the model, "action" runs the requested tools
        graph = StateGraph(AgentState)
        graph.add_node("llm", self.call_openai)
        graph.add_node("action", self.take_action)
        # If the model requested tool calls go to "action", otherwise finish
        graph.add_conditional_edges(
            "llm",
            self.exists_action,
            {True: "action", False: END}
        )
        # Tool results always go back to the model
        graph.add_edge("action", "llm")
        graph.set_entry_point("llm")
        self.graph = graph.compile(checkpointer=checkpointer)
        self.tools = {t.name: t for t in tools}
        self.model = model.bind_tools(tools)

    def exists_action(self, state: AgentState):
        """Check whether the last message contains any tool calls."""
        result = state['messages'][-1]
        return len(result.tool_calls) > 0

    def call_openai(self, state: AgentState):
        """Call the language model with the current conversation history."""
        messages = state['messages']
        if self.system:
            messages = [SystemMessage(content=self.system)] + messages
        message = self.model.invoke(messages)
        return {'messages': [message]}

    def take_action(self, state: AgentState):
        """Execute all pending tool calls in the last message."""
        tool_calls = state['messages'][-1].tool_calls
        results = []
        for t in tool_calls:
            print(f"Calling: {t}")
            if t['name'] not in self.tools:  # check for bad tool name from LLM
                print("\n ....bad tool name....")
                result = "bad tool name, retry"  # instruct LLM to retry if bad
            else:
                result = self.tools[t['name']].invoke(t['args'])
            results.append(ToolMessage(tool_call_id=t['id'], name=t['name'], content=str(result)))
        print("Back to the model!")
        return {'messages': results}
```
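To make the control flow of this graph concrete, here is a library-free sketch of the same loop in plain Python. It is not LangGraph code: `Msg`, `fake_model`, `search`, `run_tool`, and `run_agent` are hypothetical stand-ins. The `llm` step appends the model's reply to `messages` (the effect of the `operator.add` reducer), the conditional edge stops when the last message has no tool calls, and the `action` step runs each requested tool and loops back.

```python
import operator
from dataclasses import dataclass, field


@dataclass
class Msg:
    """Hypothetical stand-in for a LangChain message."""
    role: str                                       # "human", "ai" or "tool"
    content: str = ""
    tool_calls: list = field(default_factory=list)  # only set on "ai" messages that request tools


def fake_model(messages):
    """Hypothetical model: requests one search, then answers once a tool result is present."""
    if any(m.role == "tool" for m in messages):
        return Msg("ai", "Answer based on: " + messages[-1].content)
    return Msg("ai", tool_calls=[{"id": "call_1", "name": "search", "args": {"query": "weather in SF"}}])


def search(args):
    """Hypothetical tool standing in for TavilySearchResults."""
    return f"results for {args['query']}"


TOOLS = {"search": search}


def run_tool(call):
    # Mirrors take_action: unknown tool names get a retry hint instead of raising
    if call["name"] not in TOOLS:
        return Msg("tool", "bad tool name, retry")
    return Msg("tool", str(TOOLS[call["name"]](call["args"])))


def run_agent(user_text, max_steps=10):
    state = {"messages": [Msg("human", user_text)]}
    for _ in range(max_steps):
        # "llm" node: the update is merged with operator.add, i.e. appended to the list
        state["messages"] = operator.add(state["messages"], [fake_model(state["messages"])])
        # Conditional edge (exists_action): finish when the last message requests no tools
        calls = state["messages"][-1].tool_calls
        if not calls:
            return state
        # "action" node: run every requested tool, then loop back to "llm"
        state["messages"] = operator.add(state["messages"], [run_tool(c) for c in calls])
    raise RuntimeError("agent did not finish within max_steps")
```

`run_agent("What is the weather in sf?")` yields the same human, ai (tool call), tool, ai sequence that the real agent produces in the output further down. The `max_steps` guard belongs to this sketch only; the analogous safeguard in LangGraph is the `recursion_limit` setting in the run config.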

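Next, we define the DeepSeek model and the search tool, and finally render the agent we defined as a graph. Note that `deepseek-chat` is DeepSeek's general chat model; DeepSeek R1 is served under the model name `deepseek-reasoner`.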

```python
prompt = """You are a smart research assistant. Use the search engine to look up information. \
You are allowed to make multiple calls (either together or in sequence). \
Only look up information when you are sure of what you want. \
If you need to look up some information before asking a follow up question, you are allowed to do that!
"""

## Model
model = ChatOpenAI(
    model="deepseek-chat",
    api_key=DEEPSEEK_API_KEY,
    base_url="https://api.deepseek.com",
    temperature=0.0
)

tool = TavilySearchResults(max_results=4)

## Agent
agent = Agent(model, [tool], system=prompt)

from IPython.display import display, Image

display(Image(agent.graph.get_graph().draw_mermaid_png()))
```
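`ChatOpenAI` works here because DeepSeek's API is OpenAI-compatible: the client posts an OpenAI-style chat completions body to `base_url` plus `/chat/completions`. The sketch below assembles roughly such a body by hand, without sending it, to show what `bind_tools` adds to each request. The tool description and the exact set of fields are assumptions; the request LangChain actually sends may carry extra fields.

```python
import json

BASE_URL = "https://api.deepseek.com"  # same base_url as in the ChatOpenAI call above

# Assumed JSON schema for the Tavily tool: the name matches the log further down,
# the description is a placeholder.
SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "tavily_search_results_json",
        "description": "Search the web for up-to-date information.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}


def build_chat_request(system_prompt, user_text, tools, model="deepseek-chat", temperature=0.0):
    """Assemble an OpenAI-style chat completions body; nothing is sent."""
    messages = []
    if system_prompt:
        # Like Agent.call_openai, the system prompt goes first
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_text})
    return {"model": model, "messages": messages, "tools": tools, "temperature": temperature}


url = BASE_URL + "/chat/completions"
body = build_chat_request("You are a smart research assistant.", "What is the weather in sf?", [SEARCH_TOOL])
print(url)
print(json.dumps(body, indent=2))
```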

LangChain also offers a second way to define a DeepSeek model, through the dedicated `langchain-deepseek` package:

```python
from langchain_deepseek import ChatDeepSeek  # pip install langchain-deepseek

llm = ChatDeepSeek(
    model="...",
    temperature=0,
    max_tokens=None,
    timeout=None,
    max_retries=2,
    # api_key="...",
    # other params...
)
```

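We recommend the second approach, since it is a dedicated DeepSeek integration. We have summarized its main parameters below; check them against the official API documentation when wiring it up.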

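Main init args, completion parameters:

- model: str, the name of the DeepSeek model to use, e.g. `deepseek-chat`.
- temperature: float, the sampling temperature.
- max_tokens: Optional[int], the maximum number of tokens to generate.

Main init args, client parameters:

- timeout: Optional[float], the request timeout.
- max_retries: int, the maximum number of retries.
- api_key: Optional[str], the DeepSeek API key. If not passed in, it is read from the environment variable `DEEPSEEK_API_KEY`.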
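The `api_key` fallback described above amounts to a simple rule: an explicitly passed key wins, otherwise `DEEPSEEK_API_KEY` is used. The hypothetical helper below (not part of `langchain-deepseek`) spells that rule out and fails early with a clear message when neither is available, instead of surfacing as an authentication error on the first request.

```python
import os
from typing import Optional


def resolve_deepseek_key(api_key: Optional[str] = None) -> str:
    """Hypothetical helper: explicit key first, then the DEEPSEEK_API_KEY environment variable."""
    key = api_key or os.environ.get("DEEPSEEK_API_KEY")
    if not key:
        raise ValueError("No DeepSeek API key: pass api_key=... or set DEEPSEEK_API_KEY")
    return key
```

For example, `ChatDeepSeek(model="deepseek-chat", api_key=resolve_deepseek_key())` would fail at construction time rather than at the first call when no key is configured.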

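The `display(...)` call after the agent is created renders its graph: execution starts at the `llm` node, which either ends or routes to `action`, and `action` always loops back to `llm`.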

Invoking the Agent

With the agent workflow defined above, we now invoke it to see how DeepSeek performs.

```python
messages = [HumanMessage(content="What is the weather in sf?")]
result = agent.graph.invoke({"messages": messages})
print(result)

from IPython.display import Markdown

display(Markdown(result["messages"][-1].content))
```
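`print(result)` dumps the whole state, which gets long quickly, as the output below shows. A small helper like the following (hypothetical, not from the original code) prints one line per message instead. It relies only on attributes LangChain messages have: `type` (`"human"`, `"ai"`, `"tool"`), `content`, and, on AI messages, `tool_calls`.

```python
def summarize_trace(messages, width=60):
    """Return one short line per message instead of printing the whole result dict."""
    lines = []
    for m in messages:
        # Human and tool messages have no tool_calls attribute; AI messages may have an empty list
        calls = getattr(m, "tool_calls", None) or []
        if calls:
            detail = "calls " + ", ".join(f"{c['name']}({c['args']})" for c in calls)
        else:
            text = str(m.content).replace("\n", " ")
            # Truncate long contents such as raw search results
            detail = text if len(text) <= width else text[:width] + "..."
        lines.append(f"[{m.type}] {detail}")
    return lines
```

Usage: `for line in summarize_trace(result["messages"]): print(line)`.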

We ask the agent what the weather is in SF and get the following output:

```
Calling: {'name': 'tavily_search_results_json', 'args': {'query': 'weather in San Francisco'}, 'id': 'call_27505e37-86fb-4c4a-857e-51c47ca99227', 'type': 'tool_call'}
Back to the model!
{'messages': [HumanMessage(content='What is the weather in sf?'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_27505e37-86fb-4c4a-857e-51c47ca99227', 'function': {'arguments': '{"query": "weather in San Francisco"}', 'name': 'tavily_search_results_json'}, 'type': 'function'}]}, response_metadata={'token_usage': {'completion_tokens': 30, 'prompt_tokens': 239, 'total_tokens': 269}, 'model_name': 'deepseek-chat', 'system_fingerprint': 'fp_57bc6d0d10', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-a6daab15-d0e8-48c2-89b5-e6d8ded41fae-0', tool_calls=[{'name': 'tavily_search_results_json', 'args': {'query': 'weather in San Francisco'}, 'id': 'call_27505e37-86fb-4c4a-857e-51c47ca99227', 'type': 'tool_call'}], usage_metadata={'input_tokens': 239, 'output_tokens': 30, 'total_tokens': 269}), ToolMessage(content='[{\'url\': \'https://www.weatherapi.com/\', \'content\': "{\'location\': {\'name\': \'San Francisco\', \'region\': \'California\', \'country\': \'United States of America\', \'lat\': 37.78, \'lon\': -122.42, \'tz_id\': \'America/Los_Angeles\', \'localtime_epoch\': 1722146977, \'localtime\': \'2024-07-27 23:09\'}, \'current\': {\'last_updated_epoch\': 1722146400, \'last_updated\': \'2024-07-27 23:00\', \'temp_c\': 14.0, \'temp_f\': 57.1, \'is_day\': 0, \'condition\': {\'text\': \'Cloudy\', \'icon\': \'//cdn.weatherapi.com/weather/64x64/night/119.png\', \'code\': 1006}, \'wind_mph\': 12.3, \'wind_kph\': 19.8, \'wind_degree\': 263, \'wind_dir\': \'W\', \'pressure_mb\': 1013.0, \'pressure_in\': 29.91, \'precip_mm\': 0.0, \'precip_in\': 0.0, \'humidity\': 85, \'cloud\': 82, \'feelslike_c\': 12.4, \'feelslike_f\': 54.3, \'windchill_c\': 12.4, \'windchill_f\': 54.3, \'heatindex_c\': 14.0, \'heatindex_f\': 57.1, \'dewpoint_c\': 11.4, \'dewpoint_f\': 52.5, \'vis_km\': 10.0, \'vis_miles\': 6.0, \'uv\': 1.0, \'gust_mph\': 17.0, \'gust_kph\': 27.4}}"}, {\'url\': \'https://en.climate-data.org/north-america/united-states-of-america/california/san-francisco-385/t/july-7/\', \'content\': \'San Francisco Weather in July Are you planning a holiday with hopefully nice weather in San Francisco in July 2024? Here you can find all information about the weather in San Francisco in July: ... 28. July: 16 °C | 61 °F : 22 °C | 71 °F: 13 °C | 55 °F: 14 °C | 57 °F: 0.1 mm | 0.0 inch. 29. July\'}, {\'url\': \'https://www.almanac.com/weather/longrange/CA/San Francisco\', \'content\': \'60-Day Extended Weather Forecast for San Francisco, CA. ... Free 2-Month Weather Forecast. July 2024 Long Range Weather Forecast for Pacific Southwest; Dates Weather Conditions ... Jul 16-27: Sunny, hot inland; AM clouds, PM sun, warm coast: Jul 28-31: Sunny, warm: July: temperature 74° (2° above avg.) precipitation 0.1" (0.1" above avg ...\'}, {\'url\': \'https://www.weathertab.com/en/c/e/07/united-states/california/san-francisco/\', \'content\': \'Explore comprehensive July 2024 weather forecasts for San Francisco, including daily high and low temperatures, precipitation risks, and monthly temperature trends. Featuring detailed day-by-day forecasts, dynamic graphs of daily rain probabilities, and temperature trends to help you plan ahead. ... 28 66°F 53°F 19°C 12°C 04% 29 66°F 53°F ...\'}]', name='tavily_search_results_json', tool_call_id='call_27505e37-86fb-4c4a-857e-51c47ca99227'), AIMessage(content='The current weather in San Francisco is as follows:\n- Temperature: 14°C (57.1°F)\n- Condition: Cloudy\n- Wind: 12.3 mph (19.8 kph) from the W\n- Humidity: 85%\n- Feels like: 12.4°C (54.3°F)\n- Visibility: 10.0 km (6.0 miles)\n- UV: 1.0\n- Gust: 17.0 mph (27.4 kph)\n\nFor the upcoming days, the forecast indicates:\n- July 28: 16°C (61°F) low, 22°C (71°F) high, with a 0.1 mm (0.0 inch) of precipitation expected.\n- The extended forecast suggests warm conditions with temperatures around 74°F (2° above average) and 0.1" (0.1" above average) of precipitation for the month of July.', response_metadata={'token_usage': {'completion_tokens': 232, 'prompt_tokens': 1196, 'total_tokens': 1428}, 'model_name': 'deepseek-chat', 'system_fingerprint': 'fp_57bc6d0d10', 'finish_reason': 'stop', 'logprobs': None}, id='run-3192481b-d6dc-4bb7-b998-c6e2e25068e0-0', usage_metadata={'input_tokens': 1196, 'output_tokens': 232, 'total_tokens': 1428})]}
```

The current weather in San Francisco is as follows:

- Temperature: 14°C (57.1°F)
- Condition: Cloudy
- Wind: 12.3 mph (19.8 kph) from the W
- Humidity: 85%
- Feels like: 12.4°C (54.3°F)
- Visibility: 10.0 km (6.0 miles)
- UV: 1.0
- Gust: 17.0 mph (27.4 kph)

For the upcoming days, the forecast indicates:

- July 28: 16°C (61°F) low, 22°C (71°F) high, with a 0.1 mm (0.0 inch) of precipitation expected.
- The extended forecast suggests warm conditions with temperatures around 74°F (2° above average) and 0.1" (0.1" above average) of precipitation for the month of July.
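The `usage_metadata` fields in the log show where the tokens go: the second model call has a much larger prompt (1196 input tokens versus 239) because the search results are fed back to the model as a tool message. The sketch below totals usage across a run; the function name is hypothetical, and the numbers in its check are copied from the log above.

```python
def total_usage(messages):
    """Sum usage_metadata over all messages that carry it (in practice, the AI messages)."""
    totals = {"input_tokens": 0, "output_tokens": 0, "total_tokens": 0}
    for m in messages:
        # Human and tool messages have no usage_metadata
        usage = getattr(m, "usage_metadata", None)
        if usage:
            for key in totals:
                totals[key] += usage.get(key, 0)
    return totals
```

Applied to this run, `total_usage(result["messages"])` should come to 1435 input, 262 output, and 1697 total tokens.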

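The model answers the weather question accurately by summarizing the search results. Next, we ask about two cities at once: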

```python
messages = [HumanMessage(content="What is the weather in SF and LA?")]
result = agent.graph.invoke({"messages": messages})
display(Markdown(result["messages"][-1].content))
```

The result:

```
Calling: {'name': 'tavily_search_results_json', 'args': {'query': 'weather in SF and LA'}, 'id': 'call_bf6e9994-65fa-4a5b-a9b9-49fb0df927d6', 'type': 'tool_call'}
Back to the model!
```

Here is the weather information for San Francisco (SF) and Los Angeles (LA):

San Francisco, CA Daily Weather Forecast for July 2024:

July 28 (Sun): 04% chance of rain, sunrise at 6:09 AM, sunset at 8:21 PM, last quarter moon.

July 29 (Mon): 06% chance of rain.

Los Angeles (United States) weather in July 2024:

The forecast indicates typical weather conditions for July, with temperatures expected to be around 21°C.

Please note that the specific temperature details for Los Angeles are not provided in the search results, but it suggests typical July conditions. For more detailed and current weather information, it's recommended to check a reliable weather forecasting service.

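DeepSeek again gives a reasonable answer, and it points out that the search results do not include specific temperatures for Los Angeles. Finally, let's ask several questions at once: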

```python
messages = [HumanMessage(content="Who won the super bowl in 2024? In what state is the winning team headquarters located? \
What is the GDP of that state? Answer each question.")]
result = agent.graph.invoke({"messages": messages})
display(Markdown(result["messages"][-1].content))
```

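The model identifies the winner and the state correctly. Note, however, that it issued both searches in the same turn, so the second query could not use the result of the first, and the GDP question is left unanswered: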

```
Calling: {'name': 'tavily_search_results_json', 'args': {'query': 'Super Bowl 2024 winner'}, 'id': 'call_2984ac45-b886-4662-a8a9-d0209e3af9e8', 'type': 'tool_call'}
Calling: {'name': 'tavily_search_results_json', 'args': {'query': "GDP of the state where the Super Bowl 2024 winner's headquarters are located"}, 'id': 'call_f437810c-8059-4284-b539-801a38bb4a80', 'type': 'tool_call'}
Back to the model!
```

The Kansas City Chiefs won the Super Bowl in 2024. The Chiefs' headquarters are located in Kansas City, Missouri. According to the W.P. Carey School of Business at Arizona State University, the celebrations surrounding the Super Bowl contributed a total of $726.1 million to the Arizona gross domestic product (GDP). However, this figure pertains to the economic impact of the Super Bowl in Arizona, not Missouri. For specific GDP data related to Missouri, additional research would be required.
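In this run both searches were requested in one model turn (two `Calling:` lines before `Back to the model!`), and `take_action` executes them one after another. When the calls are independent, one option, not part of the original code, is to run them concurrently. The sketch below uses `concurrent.futures.ThreadPoolExecutor`; `pool.map` returns results in input order, so each result stays paired with its `tool_call_id`. It assumes each tool exposes `invoke(args)`, as LangChain tools do.

```python
from concurrent.futures import ThreadPoolExecutor


def run_tool_calls_concurrently(tool_calls, tools, max_workers=4):
    """Run every tool call at once; return (tool_call_id, tool_name, result_text) in the original order.

    tool_calls: dicts with "id", "name" and "args", like the entries of AIMessage.tool_calls
    tools: mapping from tool name to an object with an invoke(args) method
    """

    def run_one(call):
        tool = tools.get(call["name"])
        if tool is None:
            # Same fallback message take_action uses for unknown tool names
            return call["id"], call["name"], "bad tool name, retry"
        return call["id"], call["name"], str(tool.invoke(call["args"]))

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the order of tool_calls, not in completion order
        return list(pool.map(run_one, tool_calls))
```

Inside `take_action`, each triple would become `ToolMessage(tool_call_id=call_id, name=name, content=text)`. Concurrency does not help when a later query depends on an earlier answer, as with the GDP question here; the model then has to issue the follow-up search in a later turn.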

Persisting Messages to SQLite

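To give the agent memory of the conversation through checkpoints, we persist its state with SQLite. First, initialize the SQLite checkpointer: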

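```python
import sqlite3

from langgraph.checkpoint.sqlite import SqliteSaver  # pip install langgraph-checkpoint-sqlite

# ":memory:" keeps the database in RAM, so history lasts only as long as this process;
# pass a file path such as "checkpoints.db" to keep it across restarts.
# In recent LangGraph versions SqliteSaver.from_conn_string() is a context manager,
# so we build the saver from a connection directly.
conn = sqlite3.connect(":memory:", check_same_thread=False)
memory = SqliteSaver(conn)

## Pass memory to our Agent
agent = Agent(model, [tool], system=prompt, checkpointer=memory)
```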
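What the checkpointer adds is a store of conversation state keyed by `thread_id`, which is why the calls below all pass `{"configurable": {"thread_id": "1"}}`. The sketch below illustrates the idea with the standard-library `sqlite3` module. It is a deliberately simplified, hypothetical stand-in: `TinyCheckpointer` and its one-table schema are not how `SqliteSaver` stores data (LangGraph saves full graph checkpoints with its own schema).

```python
import json
import sqlite3


class TinyCheckpointer:
    """Hypothetical, simplified stand-in for a checkpointer: one row per thread_id,
    holding that thread's messages as JSON. SqliteSaver uses a different schema."""

    def __init__(self, path=":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS threads (thread_id TEXT PRIMARY KEY, messages TEXT NOT NULL)"
        )

    def load(self, thread_id):
        row = self.conn.execute(
            "SELECT messages FROM threads WHERE thread_id = ?", (thread_id,)
        ).fetchone()
        # An unknown thread starts with an empty history
        return json.loads(row[0]) if row else []

    def append(self, thread_id, new_messages):
        # Same merge rule as the messages reducer: previous list + new messages
        messages = self.load(thread_id) + list(new_messages)
        self.conn.execute(
            "INSERT OR REPLACE INTO threads (thread_id, messages) VALUES (?, ?)",
            (thread_id, json.dumps(messages)),
        )
        self.conn.commit()
        return messages
```

Appending to thread `"1"` twice returns both messages on the next `load("1")`, while a new thread id starts empty; that is the behavior the follow-up question "How about in LA?" relies on.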

Now we call the agent: we ask two questions in a row, then a third that requires comparing both answers, and look at what the agent returns:

```python
messages = [HumanMessage(content="What is the weather in sf?")]
# All three calls share this thread_id, so each one sees the earlier turns
thread = {"configurable": {"thread_id": "1"}}
for event in agent.graph.stream({"messages": messages}, thread):
    for v in event.values():
        print(v['messages'])

messages = [HumanMessage(content="How about in LA?")]
for event in agent.graph.stream({"messages": messages}, thread):
    for v in event.values():
        print(v['messages'])

messages = [HumanMessage(content="Which one is warmer?")]
for event in agent.graph.stream({"messages": messages}, thread):
    for v in event.values():
        print(v)
```

The final output:

```
{'messages': [AIMessage(content='Based on the current weather data:\n\n- San Francisco: 14°C (57.1°F)\n- Los Angeles: 22.3°C (72.1°F)\n\nLos Angeles is warmer than San Francisco.', response_metadata={'token_usage': {'completion_tokens': 56, 'prompt_tokens': 2527, 'total_tokens': 2583}, 'model_name': 'deepseek-chat', 'system_fingerprint': 'fp_57bc6d0d10', 'finish_reason': 'stop', 'logprobs': None}, id='run-8f545114-44ce-444e-900b-7788ab70c4fa-0', usage_metadata={'input_tokens': 2527, 'output_tokens': 56, 'total_tokens': 2583})]}
```

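This is the result we wanted: using the shared thread, the agent compares the two cities from the earlier turns. LangGraph and DeepSeek work well together, and combining them yields a capable agent.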
